Upgrade your AI with High-Signal Training Data and Robust RL Gyms

Collinear delivers measured improvements in real-world tasks for frontier models and enterprise AI agents.

Production-ready agents, backed by real customer results

lower GPU costs with trimmed dead-end runs at F500 enterprise

higher accuracy on domain-specific tasks at leading AI lab

speedup with efficient context management and filesystem

Data + environments for production-ready agents

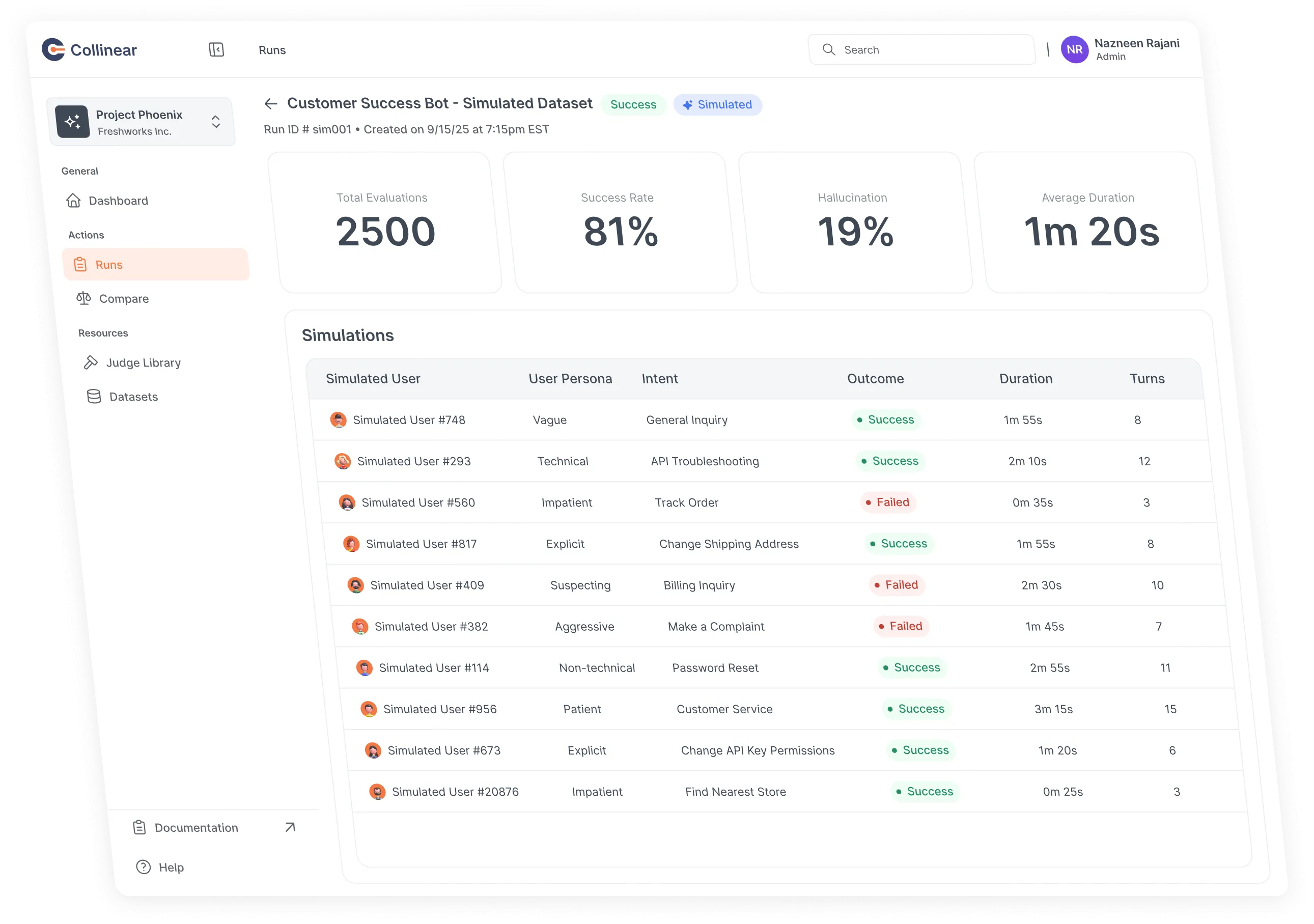

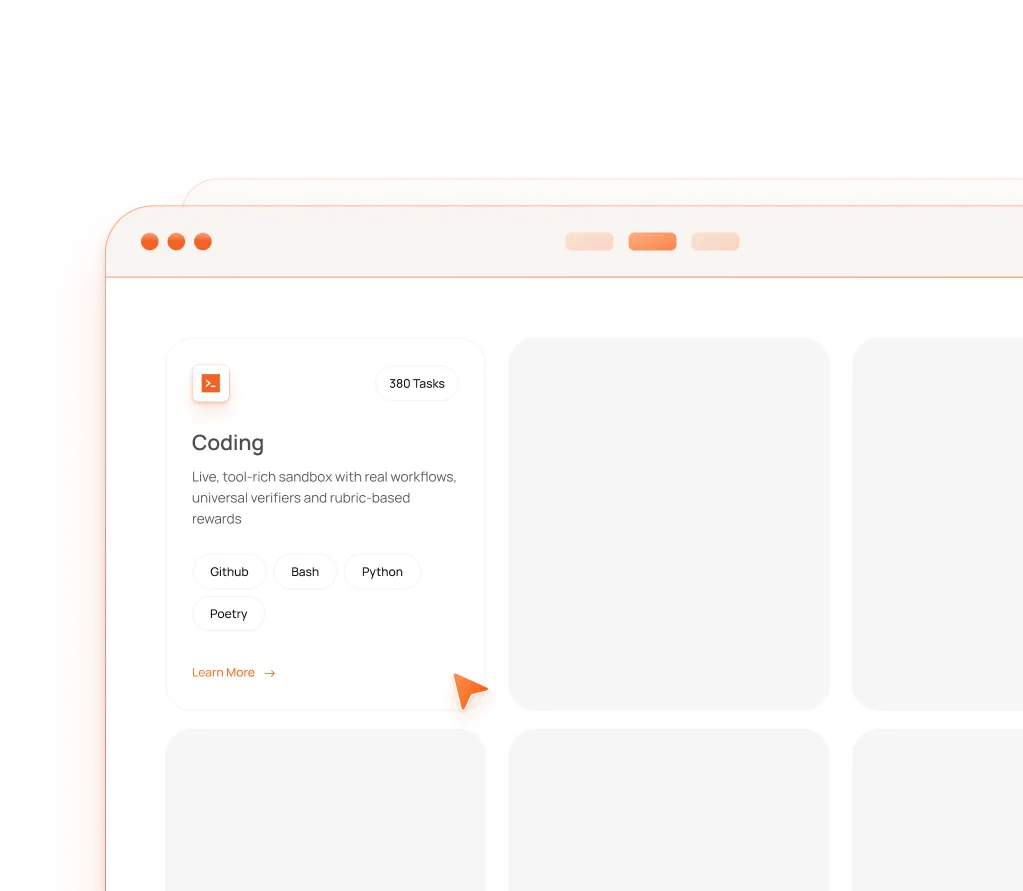

Instantly simulate millions of user interactions

Move beyond static benchmarks with real workflows, universal verifiers and rubric-based rewards, so you can train agents that actually get work done.

Curated data guaranteed to boost performance

Collinear datasets are carefully curated by our AI researchers and benchmark validated across STEM, general capabilities, and domain-specific tasks.

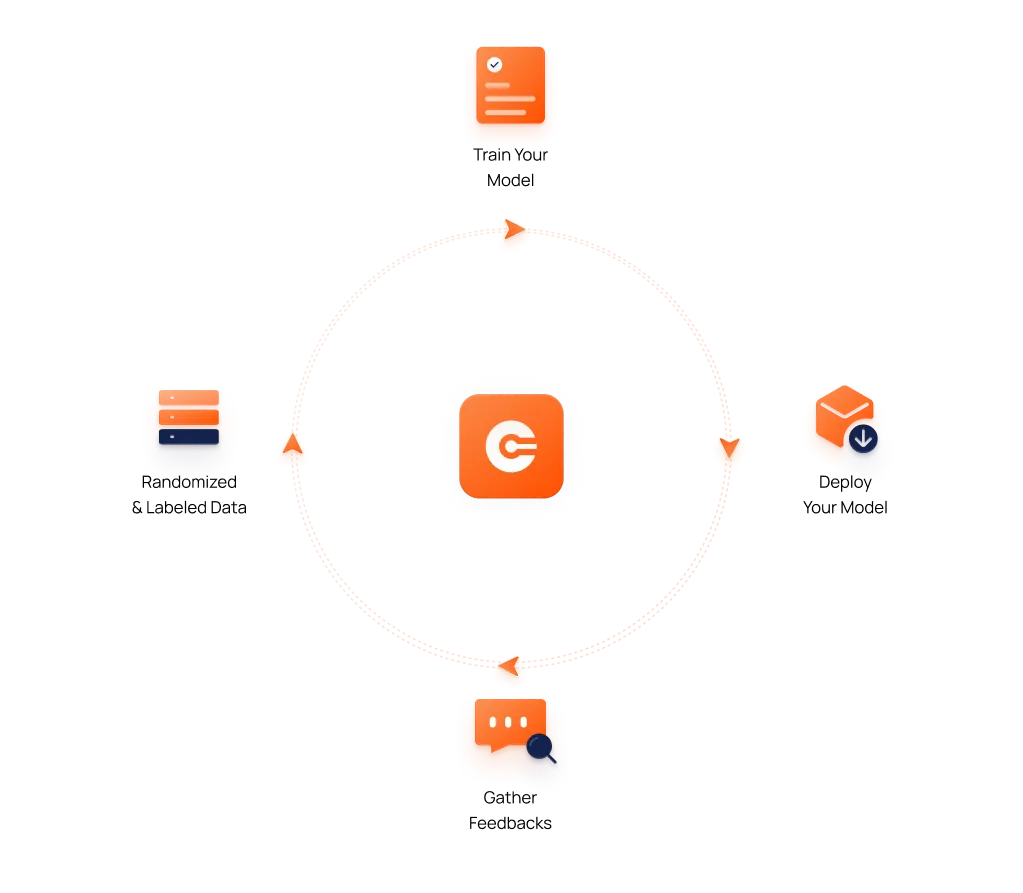

Continuously improve at scale

Further improve your AI in production with domain-specific, scalable data pipelines, custom-built by our AI researchers.

Customers ship better agents faster with Collinear.

See how leading enterprises get to deployment with confidence, control, and trust.

$10M+

saved in compute spend through targeted data curation

96%

F1 score on reliability labels used to curate high-signal training data

“Our partnership with Collinear is already driving business results. 91% of AI-generated responses showed significant improvement, leading to faster resolutions and better customer experiences.”

“Collinear’s quality judges were instrumental in launching MasterClass On Call, our latest product delivering AI-powered wisdom from world’s best pros.”

10k+

novel multilingual jailbreak modes discovered

15% increase

in visitor-to-first-visit conversion after optimizing the sales copilot with Collinear evaluation data and a real estate sandbox environment

Don’t fall behind in the AI race.

Get ahead with Collinear for better AI from development to production.